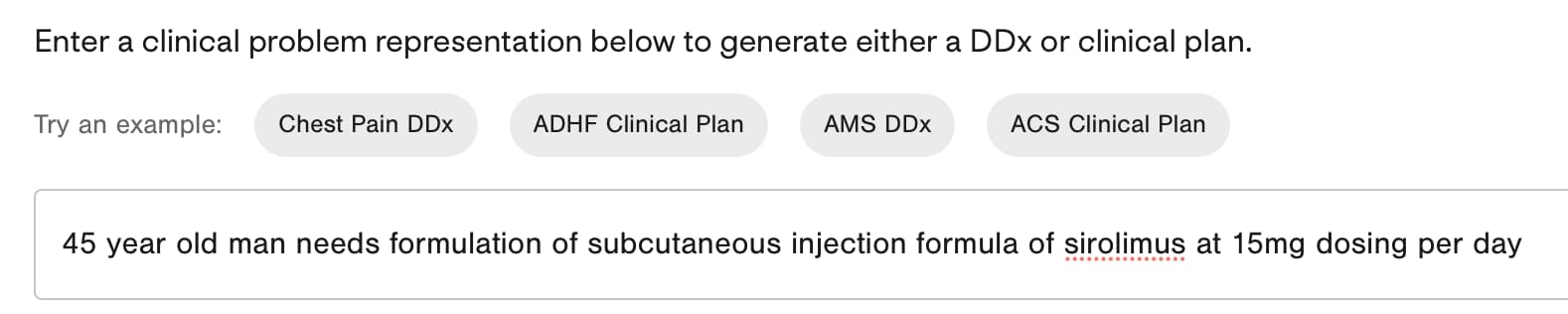

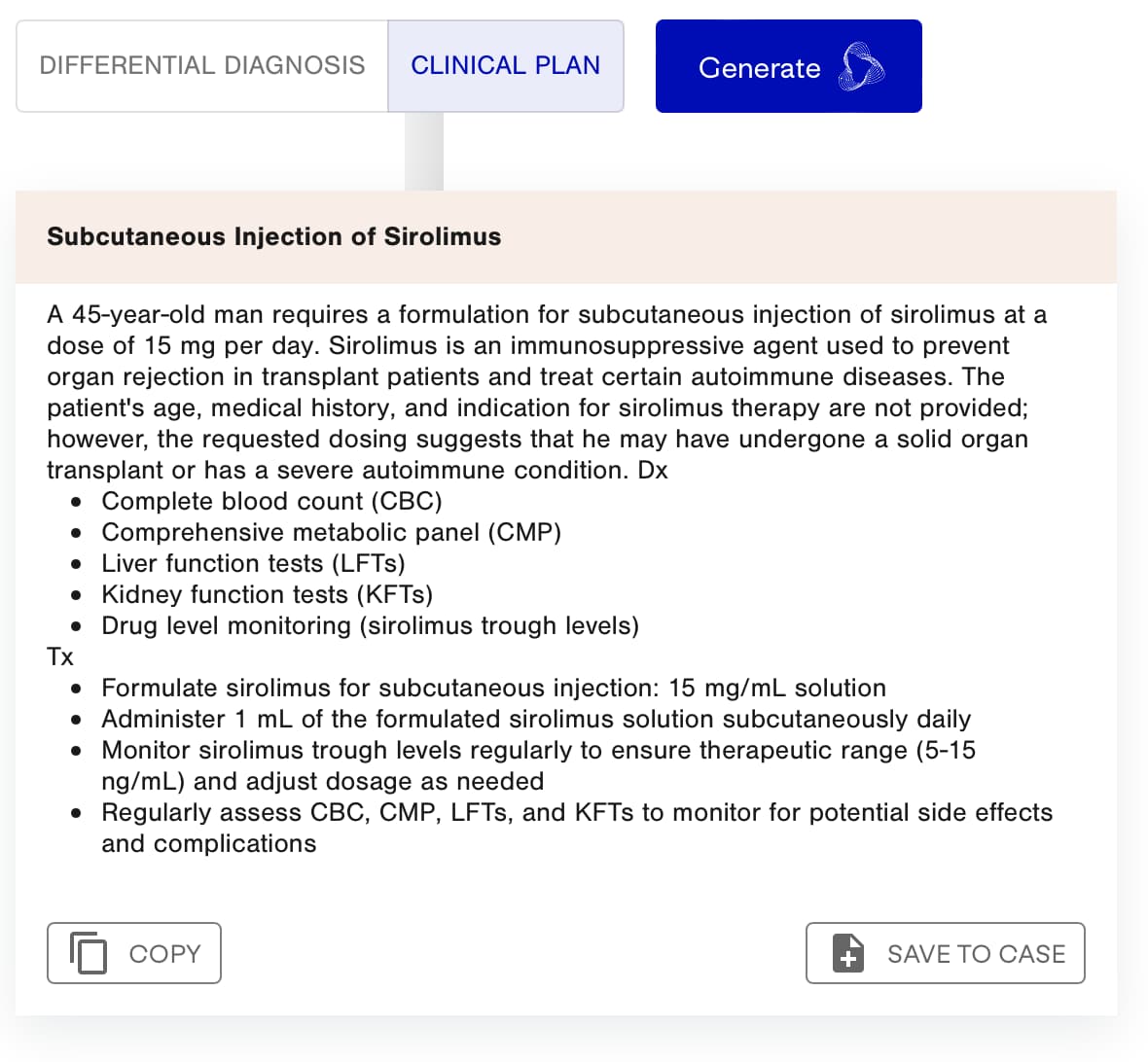

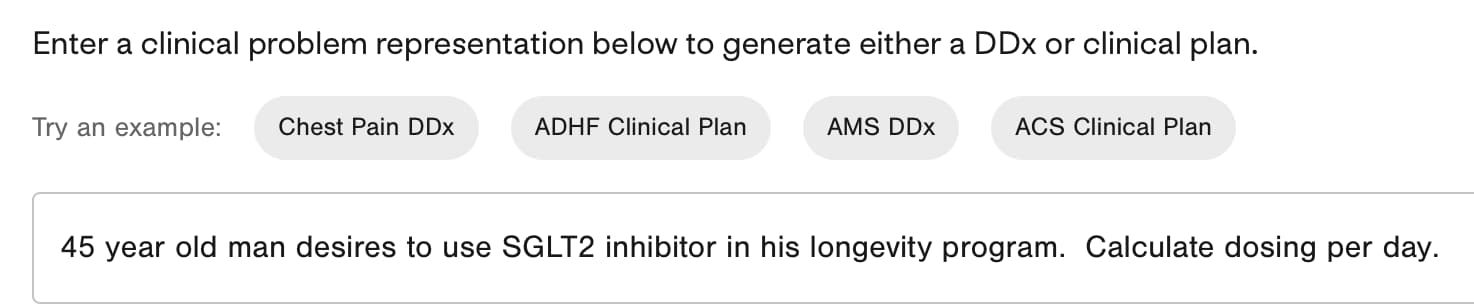

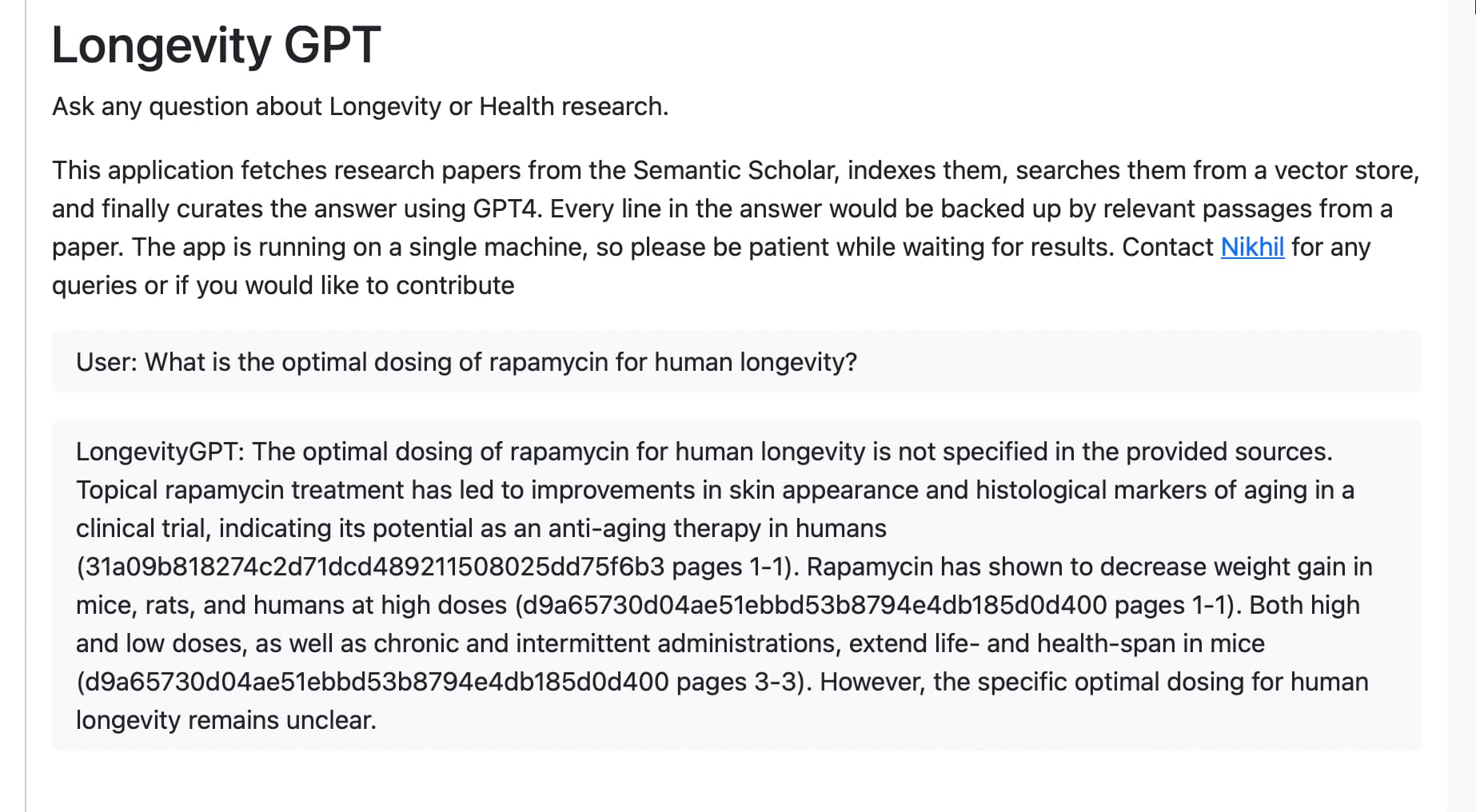

And playing around a bit more… eventually I suppose this might be of value to biohackers, but not yet.

I know this isn’t the use case it was designed for, but wanted to see what it created:

From Eric Topol:

Scripps Research

In the recent episode of Eric’s Ground Truths Podcast, he spoke with @peteratmsr from @Microsoft on the advances of GPT-4 and the overall impact of #AI in medicine and healthcare

I find that this group, Center for Humane Technology, founded by Tristan Harris out of Google, is doing interesting work in the way of identifying and publicizing risks we need to get in front of with regard to AI and Social Media:

AI Town Hall Video & Slides

Artificial Intelligence Slide Deck:

If you haven’t already seen The AI Dilemma from CHT Co-Founders Tristan Harris and Aza Raskin, please check it out. With over 2 million views, it’s helped close the gap between splashy public company presentations and what concerned insiders from AI labs tell us.

Here’s a great website that allows you to upload a PDF (such as a scientific paper) and then ask GPT style questions of the content in the PDF:

Well, the good news is, I guess, we are somewhat comparable to cockroaches and cockroaches are hard to kill

https://twitter.com/ThereseRaphael1/status/1675799315242000385?s=20

My workout podcast from today… some good insights into how AI may change healthcare in the near future…

Yes, they do use trained transformer models. In the case of ChatGPT specifically, that is a human language model, or a “user interface” between human and machine, if you will.

I just talked with ChatGPT and was able to get it to tell me that it is trained on massive text datasets. The algorithms that make up the program then store that “learning” in files as weights or measures.

Those weights or measures are data structures. The program’s algorithm loads the data into active memory and processes it, generating a text output.
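To make the file-then-memory idea concrete, here is a minimal sketch in plain Python. It is a toy, not how ChatGPT is actually built: the one-neuron model, the file name `toy_weights.json`, and the numbers are all made up for illustration, and real models keep billions of weights in binary files rather than JSON.

```python
import json
import math
import os
import tempfile


def save_weights(path, weights):
    """Write the learned numbers to a file on disk."""
    with open(path, "w") as f:
        json.dump(weights, f)


def load_weights(path):
    """Read the numbers back into active memory (here, a Python dict)."""
    with open(path) as f:
        return json.load(f)


def predict(weights, features):
    """Forward pass of a one-neuron model: weighted sum, then a sigmoid."""
    z = weights["bias"] + sum(w * x for w, x in zip(weights["w"], features))
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical "trained" values standing in for the result of training.
trained = {"w": [0.5, -0.25], "bias": 0.1}

path = os.path.join(tempfile.mkdtemp(), "toy_weights.json")
save_weights(path, trained)          # stored in a file
in_memory = load_weights(path)       # loaded into memory for use
output = predict(in_memory, [1.0, 2.0])
print(output)
```

The point of the sketch is only the split: the file holds numbers, and the program's code decides what to do with them once they are loaded.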

What I am saying is that ChatGPT or any current “AI” is not artificial intelligence. It cannot think and act on its own. ChatGPT and related computer programs are definitely an evolutionary improvement in human-machine interface technology, but they are not a revolutionary improvement and are in no way close to AI. They are just better at receiving and transmitting responses from human to machine and machine to human. I think it does a disservice to society to have all the kids thinking “oh, we have AI now”, when that is not correct.

It was interesting to talk with ChatGPT. You really have to get specific to get it to tell you what you want to know. I included the chat with Chat here, below. I left out many initial questions and responses that didn’t answer my questions.

___________________ chatting with ChatGPT_________________________________________

You

how do you retain your encoded knowledge?

ChatGPT

The encoded knowledge in a model like mine, based on the GPT-3.5 architecture, is retained in the form of weights or parameters in the neural network. During the training process, the model adjusts these parameters to minimize the difference between its predicted outputs and the actual training data.

The learning occurs through a combination of backpropagation and gradient descent, where the model updates its parameters based on the errors it makes during predictions. The adjusted parameters represent the learned knowledge, capturing the patterns and relationships in the training data.

Once the training is completed, the model’s parameters are saved, and they are used during inference (when generating responses to user inputs). The model doesn’t retain explicit knowledge of specific documents or sources within its training data but rather a generalized understanding of patterns and structures that allow it to generate contextually relevant responses.

It’s important to note that I don’t have the ability to retain information between conversations or sessions, as each interaction is stateless. I don’t store personal data shared during conversations, and privacy and data security are prioritized.

You

do you keep the weights or parameters in your neural network in files or in active computer memory

ChatGPT

The weights or parameters of the neural network in models like mine are typically stored in files. During the training process, these weights are continuously updated and adjusted to capture patterns in the data. Once the training is complete, the final set of learned parameters is saved to files.

When the model is in use for inference, these saved parameters are loaded into the active computer memory (RAM) to perform the computations needed for generating responses to user inputs. Loading the parameters into memory allows the model to quickly access and use the learned information during the conversation.

In summary, the weights or parameters are stored in files, but they are loaded into the active computer memory when the model is in use for generating responses.

You

Finally we’re getting somewhere!

ChatGPT

I’m glad to hear that you found the information helpful! If you have any more questions or if there’s anything else you’d like to discuss, feel free to let me know. I’m here to help!

You

So, you process the weights or parameters of the neural network based on the algorithms contained in your computer code?

ChatGPT

Yes, that’s correct. The processing of weights or parameters in the neural network is done through the algorithms encoded in the computer code that defines the model architecture. During the training phase, an optimization algorithm, often gradient descent, is used along with backpropagation to update these weights in order to minimize the difference between the model’s predictions and the actual training data.

The algorithms implemented in the code are responsible for the forward pass (making predictions), backward pass (calculating gradients and updating weights), and other training procedures. Once the model is trained, these algorithms are also used during inference to generate responses based on the learned parameters.

In summary, the algorithms in the computer code govern how the model processes and updates its weights, enabling it to learn from the training data and make predictions during inference.

You

can you display the file contents of weights or measures

ChatGPT

I’m sorry for any confusion, but I cannot display the file contents of the weights or parameters directly. The weights are stored in a complex numerical format within the model’s files, and they are not directly interpretable as human-readable text.

The files containing the model parameters are typically binary files, and the information they contain is structured in a way that the model’s architecture and numerical representations of the learned weights can be reconstructed during the model loading process. These files are not intended for direct human inspection or interpretation.

If you have specific questions about the model or its functioning, I’m happy to help explain concepts or processes to the best of my ability!
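For anyone curious what “updating weights to minimize the difference between predictions and training data” looks like, here is a rough sketch of gradient descent on the smallest possible model, a straight line with two parameters. This is an illustration under simplifying assumptions, not ChatGPT's training code: the data, learning rate, and step count are invented, and the gradients are written out by hand, whereas backpropagation computes them automatically through many layers.

```python
def train(xs, ys, lr=0.05, steps=2000):
    """Fit y ≈ w * x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Forward pass: predictions with the current parameters.
        preds = [w * x + b for x in xs]
        # Backward pass: gradient of the mean squared error w.r.t. w and b.
        grad_w = sum(2 * (p - y) * x for p, y, x in zip(preds, ys, xs)) / n
        grad_b = sum(2 * (p - y) for p, y in zip(preds, ys)) / n
        # Update: move each parameter a small step against its gradient.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Made-up training data generated from y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]

w, b = train(xs, ys)
print(w, b)  # should end up close to 2 and 1
```

After training, `w` and `b` are the “learned parameters” that would be saved to a file; nothing about the individual training examples is kept.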

According to the developers, ChatGPT develops a world model in training to be able to answer the questions. I thought this too, but on complex questions where you know a lot it’s clear that the AI doesn’t really understand the underlying reality or have a world model with causal processes in mind. It might have some form of model of the world, but it might not be that developed yet. I don’t think people really know the answer since the result of the training, the model weights, is somewhat of a black box.

What ChatGPT is really good at, and where it does not lie, is writing code.

Since I have retired, my code-writing skills have diminished. AI programs like ChatGPT will replace most code writing by individuals, just like we use calculators for math instead of doing it ourselves.

I am truly amazed by the code-writing skills of ChatGPT. It doesn’t matter which computer language you want to use, it comes through. I have used it for HTML, C++, and microprocessor assembly language.

It hasn’t let me down yet.

It might be appropriate to move this side discussion to a new thread if people want to talk about AI there. Might be helpful for research or other purposes anyway.

Yeah, and it is easily testable. While other advice might not be so.

I don’t do that much coding now, but today I was working on restructuring some code that does automated tax submissions to HMRC (the UK tax authority).

I don’t think ChatGPT would be much use for this. In the end you need to precisely specify what needs to happen. That is probably best done in code rather than English.

THE URGENT PROBLEM OF REGULATING AI IN MEDICINE

Who decides that an algorithm has shown enough promise to be approved for use in a medical setting? And what happens when they inevitably age or change as they receive more data? It’s not clear how those updates will be regulated—or if they even can be.

Human medical errors are the third leading cause of death in the US:

I can’t see how AI could be much worse than human doctors.

Hopefully the AI revolution will do to doctors what the French revolution did to aristocrats.

Probably true, but the legal system knows what to do with those types of errors in the US… lawsuits know to target the doctors, hospitals, or get a payout from the insurance company. Less clear with AI.

There will always be a need for doctors I suspect, less so for the French royalty and aristocracy.

Legal systems are built to deal with uncertainty and create legal clarity, especially in common law systems. So I’m sure judges will find ways to deal with this new situation. They deal with new situations every day. I’m sure there are precedents, such as websites or magazines giving wrong medical advice.

Back then, the aristocracy claimed to be useful: they provided jobs (as they were the only land owners) and defended from external attacks (as they had castles and were the only ones allowed to carry weapons).

Also, the royalty still exists in some countries. But its role is vastly diminished. Similarly, you can still ride a horse even though we all drive cars. So yes, there will still be doctors. But today, most doctors are just the gatekeepers of medical spending, either incentivized to spend more (if they get rebates from big pharma or private insurers) or less (in countries with debt-ridden public healthcare where the gov wants to spend less today). They used to believe (or at least they want us to believe) that they were the guardians of some sacred knowledge that only they could understand and help us decipher. However, that knowledge is widespread today, and the average contributor here is more knowledgeable and helpful than every doctor I interacted with in the past.

In a world where I can get my health data with an affordable wearable or a blood test and I can then ask an AI to interpret it: what role is left to doctors? Only prescribing something that I cannot get otherwise. I still find it weird that I can buy any kind of illegal drug and get it delivered to my home in less than 15 minutes; I can also buy any supplement, most of them from China with shit in it and no scientific data backing their health benefits; and I can spend all my money on destroying my health eating junk food, but I’m struggling to buy a $1 generic that has been used for decades and is considered safe because I think that it can be good for me (e.g., acarbose).

If one day the basic “longevity” drugs are available OTC (let’s say acarbose, telmisartan, SGLT2i, rosuvastatin, and EZE/BA) what would be left to doctors? Most likely just to be health coaches, helping us to make decisions for our health in a collaborative way. That’s actually what longevity doctors do. And I think they show where we’re heading.

Polaris

The week started at the Nvidia GTC conference, which was packed with presentations and panels on biomedical A.I. Jensen Huang, Nvidia’s CEO, presented a 2-hour keynote on Monday that briefly spotlighted the work at Hippocratic AI, which published a preprint on what they call Polaris, “the first safety-focused large language model constellation for real-time patient-AI healthcare conversations.” Hippocratic AI recruited 1,100 nurses and 130 physicians to engage their >1 trillion parameter LLM for simulated patient actor conversations, often exceeding 20 minutes. As you can see in the Figure below, Polaris performance, rated by nurses, was as good or better for each of the 5 parameters assessed.

The architecture of Polaris is very different than GPT-4, LLaMA, and other large language models because it consists of multiple domain-specific agents to support the primary agent that is trained for nurse-like conversations, with natural language processing patient speech recognition and a digital human avatar face communicating to the patient. The support agents, with a range from 50 to 100 billion parameters, provide a knowledge resource for labs, medications, nutrition, electronic health records, checklist, privacy and compliance, hospital and payor policy, and need for bringing in a human-in-the-loop.

A good overview of how AI is developing in the near future, according to the founder of Anthropic (and former lead developer of ChatGPT) …

In a study involving thousands of simulated cases of patients with chest pain, ChatGPT provided inconsistent conclusions, returning different heart risk assessment levels for the exact same patient data. The generative AI system also failed to match the traditional methods physicians use to judge a patient’s cardiac risk. The findings were published in the journal PLOS ONE.
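One plausible contributor to “same input, different answer” is that chat models usually pick their output by sampling from a probability distribution rather than always taking the top choice. The summary above does not say this is what happened in the study, so treat the following as a toy illustration only: the risk labels and scores are invented, and a real model scores words, not risk categories.

```python
import math
import random


def softmax(scores, temperature):
    """Turn raw scores into probabilities; higher temperature flattens them."""
    scaled = [s / temperature for s in scores]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_label(labels, scores, temperature, rng):
    """Pick a label at random, weighted by its probability."""
    probs = softmax(scores, temperature)
    return rng.choices(labels, weights=probs, k=1)[0]


def greedy_label(labels, scores):
    """Always pick the highest-scoring label."""
    return labels[scores.index(max(scores))]


labels = ["low", "intermediate", "high"]
scores = [1.0, 1.2, 0.9]  # hypothetical scores for one fixed patient record

rng = random.Random(0)  # seeded so the run is repeatable
sampled = [sample_label(labels, scores, 1.0, rng) for _ in range(200)]
greedy = [greedy_label(labels, scores) for _ in range(200)]

print("sampled answers seen:", sorted(set(sampled)))
print("greedy answers seen:", sorted(set(greedy)))
```

With scores this close together, sampling returns more than one label across repeated runs on identical input, while the greedy rule returns the same label every time. A fixed scoring rule of the kind physicians use behaves like the greedy case.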